Kubernetes Best Practices: Mastering Container Orchestration

Explore the essential Kubernetes best practices for deploying and managing cloud-native applications. This comprehensive guide covers Kubernetes architecture, resource management, security, scaling, and deployment strategies.

Introduction to Kubernetes Best Practices

Kubernetes, or K8s, is a powerful container orchestration platform that automates deploying, managing, and scaling containerized applications. Implementing Kubernetes best practices is crucial for optimizing performance, reliability, and security in cloud-native environments.

Kubernetes Architecture Best Practices

Design for High Availability

Ensuring high availability in your Kubernetes architecture is vital. Follow these Kubernetes best practices:

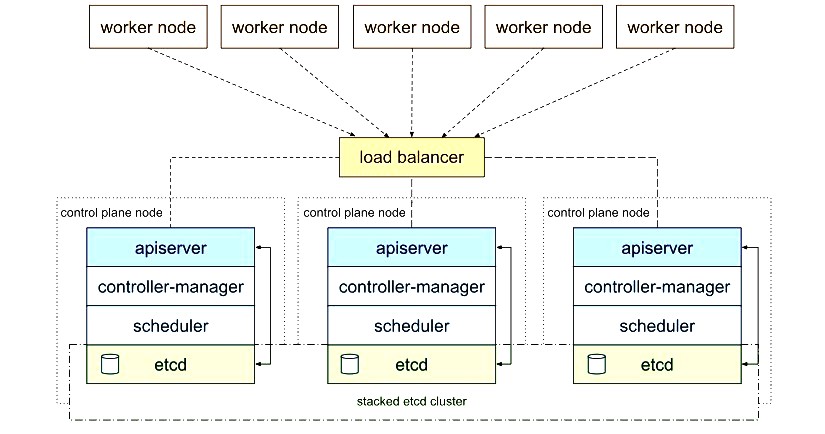

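- Multiple Control Plane Nodes: Run multiple control plane nodes (historically called master nodes) to eliminate single points of failure.

- Distributed etcd: Use a distributed etcd cluster to store configuration data, maintaining consistency across your control plane nodes. An odd number of members (typically three or five) lets the cluster keep quorum when one member fails.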

- Load Balancers: Deploy load balancers to distribute traffic across your nodes.
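
For a self-managed cluster, the three points above could translate into a kubeadm configuration along these lines. This is only a sketch: the use of kubeadm is an assumption (managed Kubernetes services run the control plane for you), the load balancer address and etcd endpoints are placeholders, and the `apiVersion` should match what your kubeadm release expects.

```yaml
# Sketch of a kubeadm ClusterConfiguration for a highly available control plane.
# All hostnames below are placeholders, not values from this guide.
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
# Every control plane node and worker talks to the API server through this
# load-balanced endpoint rather than to a single node.
controlPlaneEndpoint: "k8s-api.example.com:6443"
etcd:
  external:
    # A three-member etcd cluster keeps quorum if one member is lost.
    endpoints:
      - https://etcd-0.example.com:2379
      - https://etcd-1.example.com:2379
      - https://etcd-2.example.com:2379
    caFile: /etc/kubernetes/pki/etcd/ca.crt
    certFile: /etc/kubernetes/pki/apiserver-etcd-client.crt
    keyFile: /etc/kubernetes/pki/apiserver-etcd-client.key
```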

Choose the Right Instance Types

Choosing the right instance types is a key Kubernetes best practice for balancing performance and cost:

- CPU and Memory: Choose instances that match your application's CPU and memory requirements.

- Storage Optimization: Consider storage-optimized instances if your applications have high I/O requirements.

- Spot Instances: Use spot instances for non-critical workloads to reduce costs.

Utilize Node Pools

Node pools allow you to manage groups of nodes with similar configurations. Follow these Kubernetes best practices:

- Workload Separation: Create separate node pools for different types of workloads, such as stateless applications, stateful applications, and batch jobs.

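- Resource Optimization: Size each node pool (instance type, CPU, memory, and storage) for the workloads scheduled onto it. Resource requests and limits are still set per container, not per node pool.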
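
As an illustration of workload separation, a batch job can be pinned to a dedicated node pool with a node selector and a matching toleration. The label and taint `workload-type=batch` are hypothetical; in practice you would use whatever labels and taints your node pools actually carry.

```yaml
# Sketch: schedule a batch Job only onto nodes in a dedicated "batch" pool.
# Assumes the pool's nodes are labeled workload-type=batch and tainted
# workload-type=batch:NoSchedule (both names are illustrative).
apiVersion: batch/v1
kind: Job
metadata:
  name: dbdocs-batch-job
spec:
  template:
    spec:
      # Only nodes carrying this label are eligible.
      nodeSelector:
        workload-type: batch
      # Allows the pod onto nodes that repel other workloads via the taint.
      tolerations:
        - key: workload-type
          operator: Equal
          value: batch
          effect: NoSchedule
      containers:
        - name: dbdocs-batch
          image: dbdocs-image
          resources:
            requests:
              cpu: "500m"
              memory: "256Mi"
      restartPolicy: Never
```

The taint keeps other workloads off the pool, while the node selector keeps the job from landing elsewhere; using only one of the two gives weaker separation.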

Kubernetes Resource Management

Set Resource Requests and Limits

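Properly configured resource requests and limits are a fundamental Kubernetes best practice. Requests tell the scheduler how much CPU and memory to reserve for a container, and limits cap what it may consume, so your applications get the resources they need without starving other applications: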

    apiVersion: v1
    kind: Pod
    metadata:
      name: dbdocs-pod
    spec:
      containers:
        - name: dbdocs-container
          image: dbdocs-image
          resources:
            requests:
              memory: "256Mi"
              cpu: "500m"
            limits:
              memory: "512Mi"
              cpu: "1000m"

Leverage Kubernetes Namespaces

Namespaces provide a mechanism to isolate resources within a cluster. Follow these Kubernetes best practices:

- Separation of Concerns: Use namespaces to segregate environments, teams, or applications.

- Resource Quotas: Limit resource consumption per namespace using resource quotas.

Implement Resource Quotas and Limits

Resource quotas cap the total resources that the objects in a single namespace may request and consume:

    apiVersion: v1
    kind: ResourceQuota
    metadata:
      name: dbdocs-quota
      namespace: dbdocs-namespace
    spec:
      hard:
        pods: "10"
        requests.cpu: "4"
        requests.memory: "8Gi"
        limits.cpu: "10"
        limits.memory: "16Gi"
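
Once a quota constrains CPU and memory requests or limits, pods that omit those values are rejected in that namespace. A LimitRange can supply defaults so that such pods are still admitted. The following is a sketch with illustrative values; the object name `dbdocs-limit-range` is an assumption.

```yaml
# Sketch: per-container defaults and ceilings for the quota'd namespace.
apiVersion: v1
kind: LimitRange
metadata:
  name: dbdocs-limit-range
  namespace: dbdocs-namespace
spec:
  limits:
    - type: Container
      # Applied when a container specifies no requests.
      defaultRequest:
        cpu: "250m"
        memory: "128Mi"
      # Applied when a container specifies no limits.
      default:
        cpu: "500m"
        memory: "256Mi"
      # Upper bound any single container may ask for.
      max:
        cpu: "2"
        memory: "1Gi"
```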

Application Deployment on Kubernetes

Use Declarative Configurations

Declarative configurations are vital Kubernetes best practices for deployment management:

- YAML/JSON Configuration Files: Use YAML or JSON to define Kubernetes resources. Declarative configuration ensures that your cluster state matches the desired state defined in your configuration files.

- Version Control: Store your configuration files in a version control system (e.g., Git) for easy tracking and rollback of changes.
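
A declarative definition of the deployment used elsewhere in this guide might look like the sketch below. The replica count, labels, and image tag are assumptions chosen for illustration.

```yaml
# Sketch: desired state for the dbdocs application, kept in version control.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dbdocs-deployment
  namespace: dbdocs-namespace
  labels:
    app: dbdocs
spec:
  replicas: 3
  selector:
    matchLabels:
      app: dbdocs
  template:
    metadata:
      labels:
        app: dbdocs
    spec:
      containers:
        - name: dbdocs-container
          # Pin an explicit tag so the file fully describes what runs.
          image: dbdocs-image:1.0.0
          resources:
            requests:
              memory: "256Mi"
              cpu: "500m"
            limits:
              memory: "512Mi"
              cpu: "1000m"
```

Saved as a file such as `deployment.yaml`, it can be previewed with `kubectl diff -f deployment.yaml` and applied with `kubectl apply -f deployment.yaml`; re-applying an unchanged file is a no-op.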

Adopt a GitOps Approach

GitOps is a practice that uses Git repositories to manage Kubernetes resources:

- Continuous Delivery: Automatically apply changes to your cluster when code is pushed to your Git repository.

- Auditability: All changes to your cluster are tracked in your Git history, providing a clear audit trail.
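
One way to implement this is with a GitOps controller such as Argo CD, which is an assumption here since the guide does not prescribe a tool. The sketch below asks Argo CD to keep a namespace in sync with a directory in a Git repository; the repository URL and path are hypothetical.

```yaml
# Sketch: an Argo CD Application that syncs manifests from Git to the cluster.
# Requires Argo CD to be installed; repoURL and path are placeholders.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: dbdocs
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://git.example.com/platform/dbdocs-config.git
    targetRevision: main
    path: manifests
  destination:
    server: https://kubernetes.default.svc
    namespace: dbdocs-namespace
  syncPolicy:
    automated:
      # Delete cluster objects that were removed from Git.
      prune: true
      # Revert manual changes that drift from Git.
      selfHeal: true
```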

Rolling Updates and Rollbacks

Use rolling updates to deploy new versions of your applications with zero downtime:

- Gradual Deployment: Update a few pods at a time, allowing your application to continue serving traffic during the update.

- Easy Rollback: Roll back to a previous version if issues arise during the update.

    kubectl set image deployment/dbdocs-deployment dbdocs-container=new-image:tag
    kubectl rollout status deployment/dbdocs-deployment
    kubectl rollout undo deployment/dbdocs-deployment
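
How gradual the rollout is depends on the deployment's update strategy, and avoiding downtime also depends on a readiness probe so that traffic only reaches pods that are ready. The patch below is a sketch: the probe path `/healthz` and port `8080` are hypothetical and must match what the application actually exposes.

```yaml
# Sketch: strategic merge patch that tunes the rolling update behaviour.
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      # Start at most one extra pod above the desired replica count.
      maxSurge: 1
      # Never take an old pod away before its replacement is ready.
      maxUnavailable: 0
  template:
    spec:
      containers:
        - name: dbdocs-container
          # New pods receive traffic only after this probe succeeds.
          readinessProbe:
            httpGet:
              path: /healthz
              port: 8080
            initialDelaySeconds: 5
            periodSeconds: 10
```

Assuming the patch is saved as `rolling-update-patch.yaml`, it could be applied with `kubectl patch deployment dbdocs-deployment --patch-file rolling-update-patch.yaml`.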

Kubernetes Networking Best Practices

Design for Scalability and Performance

Kubernetes networking must be optimized for scalability and performance:

- Service Mesh: Implement a service mesh like Istio for traffic management and security.

- Network Policies: Use network policies to control inter-pod communication.

- Load Balancers: Deploy external load balancers for efficient traffic distribution.

Implement Network Policies

Network policies are vital Kubernetes best practices for controlling traffic flow between pods and external resources:

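    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: dbdocs-network-policy
      namespace: dbdocs-namespace
    spec:
      # Applies to every pod labeled app=dbdocs in this namespace
      podSelector:
        matchLabels:
          app: dbdocs
      # Egress is listed without any egress rules, so all outbound
      # traffic from the selected pods is denied
      policyTypes:
        - Ingress
        - Egress
      # Only pods labeled app=frontend may connect to the selected pods
      ingress:
        - from:
            - podSelector:
                matchLabels:
                  app: frontend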
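
Because the policy above lists Egress under `policyTypes` without any egress rules, the selected pods cannot open outbound connections, including DNS lookups. If that is not intended, a companion policy can allow DNS again. The sketch below assumes the cluster DNS service runs in the `kube-system` namespace and that the network plugin enforces network policies; the policy name is illustrative.

```yaml
# Sketch: allow the dbdocs pods to resolve DNS while other egress stays blocked.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: dbdocs-allow-dns-egress
  namespace: dbdocs-namespace
spec:
  podSelector:
    matchLabels:
      app: dbdocs
  policyTypes:
    - Egress
  egress:
    - to:
        # kubernetes.io/metadata.name is set automatically on namespaces
        # in recent Kubernetes versions.
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: kube-system
      ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
```

Network policies are additive, so this rule widens what the first policy permits without replacing it.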

Implement Ingress Controllers

Ingress controllers manage external access to your services, providing load balancing and SSL termination:

- SSL Termination: Terminate SSL connections at the ingress controller, reducing the load on your application pods.

- Path-Based Routing: Route traffic to different services based on the request path.
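
Both points can be expressed in a single Ingress resource. In the sketch below, the host name, the TLS secret, the two backend services, and the `nginx` ingress class are all assumptions; an ingress controller must already be installed for the resource to have any effect.

```yaml
# Sketch: TLS termination plus path-based routing to two services.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: dbdocs-ingress
  namespace: dbdocs-namespace
spec:
  ingressClassName: nginx
  tls:
    # The controller terminates TLS using the certificate in this secret.
    - hosts:
        - dbdocs.example.com
      secretName: dbdocs-tls
  rules:
    - host: dbdocs.example.com
      http:
        paths:
          # Requests under /api go to the API service.
          - path: /api
            pathType: Prefix
            backend:
              service:
                name: dbdocs-api
                port:
                  number: 8080
          # Everything else goes to the frontend service.
          - path: /
            pathType: Prefix
            backend:
              service:
                name: dbdocs-frontend
                port:
                  number: 80
```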

Kubernetes Security Best Practices

Implement Role-Based Access Control (RBAC)

RBAC is a cornerstone of Kubernetes security. It controls which users and applications may perform which actions on which resources in the cluster:

- Granular Permissions: Define roles with minimal required permissions.

- Namespace Isolation: Use RBAC to enforce isolation between namespaces.

    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      namespace: dbdocs-namespace
      name: dbdocs-role
    rules:
      - apiGroups: [""]
        resources: ["pods"]
        verbs: ["get", "list", "watch"]
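
A Role grants nothing until it is bound to a subject. The sketch below binds `dbdocs-role` to a service account; the service account name `dbdocs-service-account` is hypothetical.

```yaml
# Sketch: give one service account the read-only pod permissions of dbdocs-role.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: dbdocs-role-binding
  namespace: dbdocs-namespace
subjects:
  - kind: ServiceAccount
    name: dbdocs-service-account
    namespace: dbdocs-namespace
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: dbdocs-role
```

The result can be checked with `kubectl auth can-i list pods -n dbdocs-namespace --as=system:serviceaccount:dbdocs-namespace:dbdocs-service-account`.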

Pod Security Policies

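Pod Security Policies (PSPs) control the security settings of pods on clusters older than Kubernetes v1.25. The PodSecurityPolicy API was deprecated in v1.21 and removed in v1.25, so newer clusters rely on Pod Security Admission or a policy engine for the same goals: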

- Restrict Capabilities: Limit container privileges, preventing access to sensitive resources.

- Validate Configuration: Ensure pods adhere to security standards, such as using non-root users and read-only file systems.

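    # PodSecurityPolicy is only served by clusters older than v1.25
    apiVersion: policy/v1beta1
    kind: PodSecurityPolicy
    metadata:
      name: restricted-psp
    spec:
      privileged: false
      allowPrivilegeEscalation: false
      requiredDropCapabilities:
        - ALL
      runAsUser:
        rule: MustRunAsNonRoot
      # seLinux and supplementalGroups are required fields of the spec
      seLinux:
        rule: RunAsAny
      supplementalGroups:
        rule: RunAsAny
      fsGroup:
        rule: RunAsAny
      volumes:
        - 'configMap'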
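
On clusters where PodSecurityPolicy is no longer available (v1.25 and later), comparable restrictions can be enforced with the built-in Pod Security Admission controller by labeling a namespace. This is a sketch that applies the `restricted` Pod Security Standard to the namespace used in this guide; whether `restricted` or the looser `baseline` level fits is a judgment call for your workloads.

```yaml
# Sketch: enforce the "restricted" Pod Security Standard for one namespace.
apiVersion: v1
kind: Namespace
metadata:
  name: dbdocs-namespace
  labels:
    # Reject pods that violate the restricted profile.
    pod-security.kubernetes.io/enforce: restricted
    # Also record violations in audit logs and show warnings to clients.
    pod-security.kubernetes.io/audit: restricted
    pod-security.kubernetes.io/warn: restricted
```

Starting with only the `warn` and `audit` labels is a common way to see what would break before turning on `enforce`.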

Secure Secrets Management

Managing secrets is critical for Kubernetes security:

- Encryption: Enable encryption at rest for secrets stored in etcd.

- Environment Variables: Inject secrets into containers using environment variables or mounted volumes.

    apiVersion: v1
    kind: Secret
    metadata:
      name: dbdocs-secret
      namespace: dbdocs-namespace
    type: Opaque
    # Values under data are base64-encoded, not encrypted
    data:
      username: YWRtaW4=
      password: MWYyZDFlMmU2N2Rm
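
The secret can then be injected into a container either way. The sketch below exposes `username` as an environment variable and mounts `password` as a read-only file; the pod name, variable name, and mount path are illustrative.

```yaml
# Sketch: consume dbdocs-secret via an environment variable and a volume.
apiVersion: v1
kind: Pod
metadata:
  name: dbdocs-secret-consumer
  namespace: dbdocs-namespace
spec:
  containers:
    - name: dbdocs-container
      image: dbdocs-image
      env:
        # The decoded value of the "username" key.
        - name: DB_USERNAME
          valueFrom:
            secretKeyRef:
              name: dbdocs-secret
              key: username
      volumeMounts:
        # The password appears as /etc/dbdocs/credentials/password.
        - name: dbdocs-credentials
          mountPath: /etc/dbdocs/credentials
          readOnly: true
  volumes:
    - name: dbdocs-credentials
      secret:
        secretName: dbdocs-secret
        items:
          - key: password
            path: password
```

Mounted files are refreshed when the secret changes, whereas environment variables are fixed at container start, which is one reason to prefer volumes for credentials that rotate.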

Audit and Monitor Kubernetes Clusters

Continuous monitoring is among the top Kubernetes best practices:

- Audit Logs: Enable audit logging to track API interactions.

- Monitoring Tools: Use tools like Prometheus and Grafana for cluster health and performance monitoring.
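
Audit logging is driven by a policy file passed to the API server (for example through the `--audit-policy-file` and `--audit-log-path` flags on self-managed clusters; managed services usually expose their own setting). The rules below are a sketch of one reasonable starting point, not a recommended baseline.

```yaml
# Sketch: audit policy evaluated top to bottom; the first matching rule wins.
apiVersion: audit.k8s.io/v1
kind: Policy
# Skip the initial stage to avoid logging each request twice.
omitStages:
  - RequestReceived
rules:
  # Record who touched secrets and config maps, but never their contents.
  - level: Metadata
    resources:
      - group: ""
        resources: ["secrets", "configmaps"]
  # Record full request and response bodies for RBAC changes.
  - level: RequestResponse
    verbs: ["create", "update", "patch", "delete"]
    resources:
      - group: "rbac.authorization.k8s.io"
  # Catch-all: log metadata for everything else.
  - level: Metadata
```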

Kubernetes Monitoring and Logging

Use Prometheus and Grafana

Prometheus and Grafana provide powerful monitoring and visualization capabilities:

- Metrics Collection: Use Prometheus to collect metrics from your applications and Kubernetes components.

- Dashboards: Create Grafana dashboards to visualize metrics and gain insights into your cluster's performance.
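
If Prometheus is deployed through the Prometheus Operator (an assumption; plain Prometheus uses scrape configs instead), metrics collection for an application can be declared with a ServiceMonitor. The sketch presumes a Service labeled `app: dbdocs` with a port named `metrics`, neither of which is defined in this guide.

```yaml
# Sketch: tell the Prometheus Operator to scrape the dbdocs service.
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: dbdocs-service-monitor
  namespace: dbdocs-namespace
spec:
  # Selects Services (not pods) carrying this label.
  selector:
    matchLabels:
      app: dbdocs
  endpoints:
    # Refers to the Service port by name.
    - port: metrics
      path: /metrics
      interval: 30s
```

Depending on how the operator is installed, the ServiceMonitor may also need a label that matches the Prometheus instance's selector before it is picked up.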

Centralized Logging

Centralized logging solutions help aggregate and analyze logs from multiple sources:

- Elastic Stack: Use the Elastic Stack (ELK): Elasticsearch to store and index logs, Logstash to process and transform them, and Kibana to visualize them.

- Fluentd: Implement Fluentd or Fluent Bit to forward logs from Kubernetes nodes and applications to a central logging system.
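
As a rough sketch of the forwarding step, a Fluent Bit pipeline (in its YAML configuration format) could tail container logs on each node, enrich them with Kubernetes metadata, and ship them to Elasticsearch. The Elasticsearch host name and index are hypothetical, and the available options should be checked against the Fluent Bit version in use.

```yaml
# Sketch: Fluent Bit pipeline, typically run as a DaemonSet on every node.
service:
  flush: 5
  log_level: info
pipeline:
  inputs:
    # Read the container log files written by the container runtime.
    - name: tail
      tag: kube.*
      path: /var/log/containers/*.log
  filters:
    # Attach pod name, namespace, and labels to each record.
    - name: kubernetes
      match: kube.*
  outputs:
    # Send everything to a central Elasticsearch endpoint.
    - name: es
      match: kube.*
      host: elasticsearch.logging.svc
      port: 9200
      index: kubernetes-logs
```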

Kubernetes Scaling and Performance

Auto-Scaling Best Practices

Horizontal Pod Autoscaling

Horizontal Pod Autoscaling automatically adjusts the number of pods based on resource utilization:

- CPU and Memory: Scale pods based on CPU or memory usage, ensuring that your applications have the necessary resources.

- Custom Metrics: Use custom metrics to scale pods based on application-specific indicators.

    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    metadata:
      name: dbdocs-hpa
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: dbdocs-deployment
      minReplicas: 2
      maxReplicas: 10
      metrics:
        - type: Resource
          resource:
            name: cpu
            target:
              type: Utilization
              averageUtilization: 50
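
Scaling on a custom metric follows the same shape. The sketch below targets an average of 100 requests per second per pod; the metric name `http_requests_per_second` is hypothetical and only works if a custom metrics adapter exposes it to the cluster.

```yaml
# Sketch: scale on an application-specific per-pod metric.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: dbdocs-hpa-custom
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: dbdocs-deployment
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Pods
      pods:
        metric:
          name: http_requests_per_second
        target:
          type: AverageValue
          averageValue: "100"
  behavior:
    scaleDown:
      # Wait five minutes of sustained low load before removing pods.
      stabilizationWindowSeconds: 300
```

In both cases the autoscaler computes the desired replica count as ceil(currentReplicas × currentValue / targetValue). For example, 4 replicas averaging 75% CPU against a 50% target give ceil(4 × 75 / 50) = 6 replicas.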

Cluster Autoscaling

Cluster autoscaling automatically adjusts the number of nodes in your cluster based on resource demands:

- Node Pools: Use node pools to scale specific types of nodes independently, optimizing resource allocation.

- Cost Management: Autoscaling helps manage costs by adding nodes only when needed and removing them during low-demand periods.

Optimize Resource Allocation

Optimizing resource allocation keeps both performance and cost under control:

- Right-Sizing: Continuously right-size pods based on workload patterns.

- Node Management: Review node utilization regularly, and resize or consolidate node pools so that capacity tracks actual demand.
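
For right-sizing, one option is the Vertical Pod Autoscaler running in recommendation-only mode. This is a sketch that assumes the VPA add-on is installed in the cluster, since it is not part of core Kubernetes.

```yaml
# Sketch: collect resource recommendations without changing running pods.
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: dbdocs-vpa
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: dbdocs-deployment
  updatePolicy:
    # "Off" only publishes recommendations; it never evicts or resizes pods.
    updateMode: "Off"
```

The suggested requests then show up under `kubectl describe vpa dbdocs-vpa` and can be copied into the deployment manifest. Letting VPA apply changes automatically is generally not combined with a Horizontal Pod Autoscaler that scales on the same CPU or memory metric.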

Conclusion: Implementing Kubernetes Best Practices

Adopting Kubernetes best practices is essential for optimizing your cloud-native applications. From architecture design to security, resource management, and scaling, these practices ensure that your Kubernetes environment is efficient, secure, and scalable.
